The Definitive Guide to Question Mining

How elite teams discover, prioritize, and own the questions that drive AEO, trust, and revenue

There is a skill quietly separating the marketing teams that feel ahead from the ones that feel like they are constantly reacting.

It is not prompt engineering.

It is not publishing more.

It is not another SEO tactic.

It is a moat.

A structural advantage built on one capability: understanding the real questions your market is asking and owning the answers that shape how those questions get resolved.

That skill is question mining.

And in an AI-first world, it is becoming the new market insights function.

Not as a department.

As infrastructure.

If you are responsible for how your company is explained, in messaging, in content, in sales conversations, and increasingly in AI-generated answers, this guide is for you.

For years, companies relied on research teams, surveys, positioning workshops, and quarterly insights decks. That model worked when discovery was slower and document-based.

It breaks when answers are synthesized in real time.

Today, visibility is determined less by who ranks and more by whose explanation gets reused.

AI-generated summaries now appear across a growing share of informational searches, and their footprint continues to expand. When answers are synthesized directly on the results page, discovery shifts from ranking documents to influencing explanations.

This shift is measurable. Across published industry analyses, queries that trigger AI summaries often show meaningful compression in organic click-through rates: frequently double-digit declines, and in some cases 20–30% or more, depending on intent, vertical, and SERP layout.

Ranking is no longer the moat.

Clarity is.

Search engines ranked documents.

Large language models assemble explanations.

When someone asks a question inside an AI interface, there is no page two. There is no “we’ll get them later.” There is one synthesized response built from the clearest available sources.

If your explanation cannot survive synthesis, it does not exist in that moment.

That is where question mining becomes infrastructure.

Done correctly, it feeds everything:

Messaging sharpens because it reflects real tension instead of invented differentiation.

Positioning strengthens because it mirrors how buyers actually compare options.

Content strategy becomes disciplined because it prioritizes decision-shaping uncertainty.

AEO improves because your explanations align with the exact questions AI systems are trying to resolve.

Sales enablement gets stronger because objections are handled before the call.

Product teams gain signal because recurring friction is visible, not anecdotal.

AEO is not the whole story.

It is the most visible proof of the shift.

AI systems make explanation quality measurable. They expose whether your thinking is clear enough to be reused. But the underlying capability is broader than AEO.

It is about building a machine that continuously captures, prioritizes, and operationalizes market uncertainty.

Most companies are not building that machine.

Most marketing teams are measuring output while losing influence.

They publish more.

They optimize keywords.

They track traffic.

Meanwhile, AI systems assemble explanations from whoever reduces uncertainty most clearly.

I have seen companies rank for thousands of keywords and still lose deals to the same three unanswered objections.

The problem was never volume.

It was ownership.

Keywords describe topics.

Questions expose uncertainty.

Uncertainty is where decisions happen.

“Headless CMS” is a topic.

“Is a headless CMS overkill if my marketing team needs to move fast?” is a decision.

Question mining is the discipline of systematically identifying the questions that determine whether someone chooses, hesitates, or walks away.

Most of those questions already exist inside your company:

In sales calls where deals stall.

In support tickets where confusion appears.

In reviews where regret surfaces.

In Reddit threads where buyers compare tools honestly.

In internal search logs where people type what they cannot find.

In AI prompts where buyers ask for synthesis instead of links.

Most teams have the signal.

Very few build the machine.

Building that machine manually is possible. It is also fragile.

Questions live across transcripts, tickets, reviews, analytics tools, AI prompts, and internal systems. They have to be normalized, clustered, qualified for whether they actually shape decisions, and refreshed continuously.

That is not a spreadsheet exercise.

It is infrastructure.

Some companies build that layer internally. Others use platforms like AirOps to unify fragmented question signals, structure them into intent clusters, and operationalize them across content, messaging, and AI surfaces.

The implementation can vary.

The requirement does not.

The work is not collecting questions.

It is deciding which questions shape decisions and answering them so clearly that your explanation becomes the reference point across AI systems, search results, sales conversations, and internal alignment.

That is the moat.

Not more content.

Shared understanding at scale.

1. The Answer Ownership System

Question mining is not a campaign.

It is an operating discipline.

The goal is simple: ensure that the most important questions in your market are answered clearly, consistently, and repeatedly by your brand.

That requires a loop.

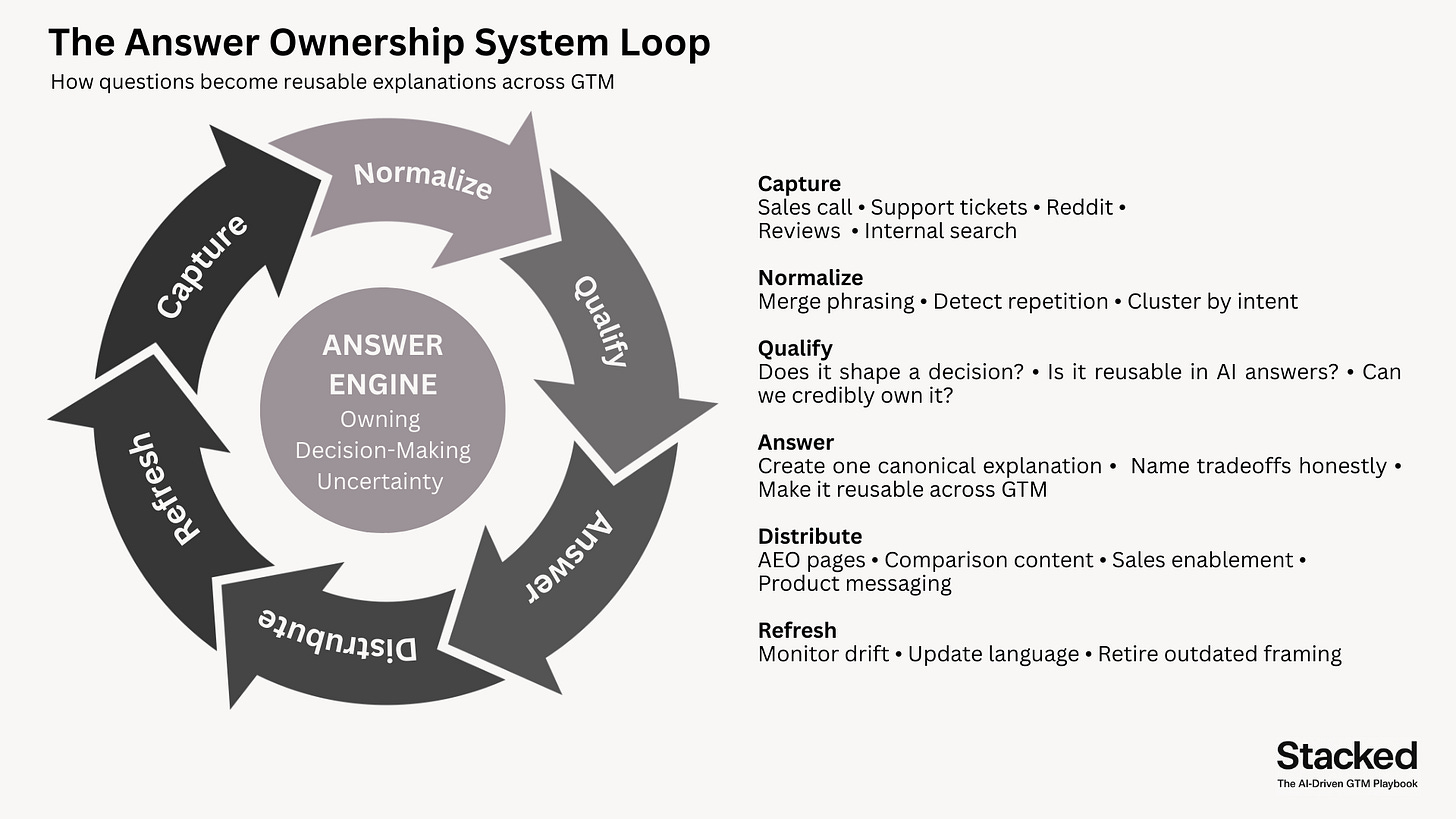

I’ve been referring to this recently as the Answer Ownership System.

The Answer Ownership System:

Capture real questions from live sources

Normalize phrasing and detect recurring uncertainty

Qualify which questions actually shape decisions

Turn those into canonical, reusable explanations

Distribute those explanations across every surface

Refresh them as language and context evolve

When this loop runs continuously, explanation becomes infrastructure.

Sales friction drops.

Positioning sharpens.

AI visibility compounds.

Customer confusion decreases.

This is not a content calendar.

It is a clarity engine.

Validation: Elite Teams Already Run This Loop

I pressure-tested the Answer Ownership System with Ethan Smith (CEO of Graphite), one of the operators I trust most in AI search and modern GTM.

He went back to the research and surfaced examples of category leaders executing each step. Different industries, same structure.

Capture: Pull real questions from live sources

Elite teams treat questions as first-party data. They extract them from sales calls, support tickets, reviews, and community threads.

Examples

Gong turns sales conversations into a living source of objections and buyer language (Gong Labs is the externalized version of this).

HubSpot tracks knowledge base searches, support tickets, and community questions to identify content gaps.

Impact

Gong turned objection mining into a scalable content and narrative engine.

HubSpot uses search analytics to surface unanswered self-serve questions, then builds content to close those gaps.

Normalize: Merge different phrasings into shared uncertainty

Most teams drown in duplicates. Elite teams collapse messy phrasing into clean, reusable intent.

Examples

Zapier normalized intent around app-pair workflows, not “automation software.”

Canva normalized design uncertainty into template-driven intent systems.

Impact

Zapier scaled to 70,000+ integration pages and drives 6M+ monthly organic visits.

Canva built a template ecosystem at massive scale and drives 38M+ monthly organic visits.

Qualify: Decide which questions actually shape decisions

Not every question deserves investment. Elite teams prioritize decision-weighted uncertainty, not curiosity.

Examples

Stripe prioritizes developer uncertainty because unclear answers lose adoption.

Marcus Sheridan’s Big 5 (pricing, problems, comparisons, reviews, best-of) is a qualification lens built around decision-making moments.

Impact

Stripe’s documentation functions as an adoption engine and trust moat because it resolves high-stakes uncertainty.

Teams using Big 5-style transparency consistently reduce friction in evaluation and internal buy-in.

Answer: Create canonical explanations that can be reused

Elite teams don’t write “content.” They create canonical answers that become the reference point.

Examples

Stripe has a canonical documentation architecture that anchors explanation.

Ahrefs creates blog posts that naturally demo the product inside the answer (e.g., long-tail keyword research tutorials using Keywords Explorer).

Impact

Stripe becomes the default reference in payments conversations because the explanation is consistent and reliable.

Ahrefs’ key principle is that the canonical answer includes the product as part of solving the problem.

Distribute: Push the same answers across every surface

Elite teams don’t let answers live in one channel. They distribute explanation across every GTM surface where decisions happen.

Examples

Zapier distributes canonical answers across integration pages, product education, and workflows.

G2 distributes explanation via comparison and category pages.

Canva distributes explanation through template landing pages, search surfaces, and use-case flows.

Impact

Zapier dominates intent-based discovery because answers meet users at the exact moment of need.

G2 owns a large share of “X vs Y” evaluation paths.

Canva’s template system becomes a discovery engine and conversion engine simultaneously.

Refresh: Prevent explanation decay and drift

This is where most teams fail. Elite teams treat answers like infrastructure that needs maintenance.

Examples

Ahrefs runs systematic content refresh and consolidation programs.

Stripe uses versioning and documentation hygiene to keep explanations current.

Impact

Ahrefs has documented traffic recoveries as high as 468% on refreshed and consolidated assets.

Stripe retains trust because users can rely on documentation that stays current as the ecosystem shifts.

2. What a “Good Question” Actually Is

Most teams believe they are doing question mining.

They are collecting questions.

Usually the loud ones. The easy ones. The ones that show up in SEO tools.

That is not a strategy.

In an AEO-aware environment, a good question meets four criteria:

It reflects real decision uncertainty.

AI systems are likely to encounter it across prompts.

A clear answer would meaningfully shape how the category is explained.

Your brand can credibly own that explanation.

If a question does not meet those criteria, it may still be useful.

It is not leverage.

The Volume Trap

Search volume feels objective. It produces numbers and dashboards.

But in AI-driven discovery, volume is often a weak proxy for influence.

The questions that shape AI answers are frequently:

Worded inconsistently

Embedded in objections

Emotional before they are logical

Lower frequency but higher consequence

“How much does this cost?” is common.

“What ends up costing more six months in?” influences decisions.

Here is the uncomfortable truth:

The questions that matter most rarely look impressive in a dashboard. They’re inconsistent, sometimes phrased awkwardly, often buried inside a longer complaint or hesitation. Most of them are low volume. But they’re the questions that make someone pause, and that pause is what actually shapes a decision.

Search volume tells you what people are curious about. It doesn’t tell you what’s holding them back.

If you build around volume alone, you’ll generate traffic. You might even rank well. But if you build around uncertainty, you influence how decisions get made.

In an AI-driven environment, that distinction matters more than ever. AI systems don’t reward popularity. They reward explanations that reduce ambiguity.

High-volume questions help someone understand a category. Low-volume, high-consequence questions help someone choose. And choosing is where leverage lives.

The Practical Filter

Not every question deserves equal investment.

Browser questions help someone learn.

Buyer questions help someone decide.

AI systems privilege explanations that reduce ambiguity and enable choice.

Use this filter:

Does answering this reduce uncertainty?

Would it change how a recommendation is framed?

Would the answer be reusable across multiple prompts?

Can your brand credibly be cited?

If yes, prioritize it.

If not, support it…but do not anchor strategy to it.
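
One way to make the filter repeatable is to record the four answers for each question and triage from them. The sketch below is illustrative only: the `FilterAnswers` and `triage` names are hypothetical, and it assumes a question is prioritized only when all four checks pass, which is one reading of the filter and not a rule from any tool.

```python
from dataclasses import dataclass, astuple


@dataclass
class FilterAnswers:
    """Yes/no answers to the four filter questions (field names are illustrative)."""
    reduces_uncertainty: bool
    changes_recommendation_framing: bool
    reusable_across_prompts: bool
    brand_credibly_citable: bool


def triage(answers: FilterAnswers) -> str:
    """Return "prioritize" when every check passes, otherwise "support".

    Assumption: all four answers must be yes. Loosen this if your team
    decides that three out of four is enough.
    """
    return "prioritize" if all(astuple(answers)) else "support"


decision_question = FilterAnswers(True, True, True, True)
browser_question = FilterAnswers(True, False, True, False)
print(triage(decision_question))  # prioritize
print(triage(browser_question))   # support
```

Keeping the four answers as separate fields, instead of a single score, preserves the reason a question was deprioritized when someone revisits the backlog later.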

The Core Insight

In this environment, question mining is not about curiosity.

It is about identifying the uncertainty that shapes decisions…and becoming the clearest explanation available.

Curiosity builds awareness.

Uncertainty drives influence.

The brands that own uncertainty build the moat.

3. The Question Mining System (Not a One-Off Exercise)

Most teams treat question mining like research.

They do it when traffic dips.

They do it before a big content push.

They do it when someone asks for “fresh ideas.”

Then they stop.

That’s the problem.

In an AEO world, question mining is not a project. It’s a system. If it doesn’t run continuously, it decays. Language shifts. Buyer concerns evolve. AI systems update how they frame answers. Yesterday’s “good question” quietly stops mattering.

Elite teams don’t “do” question mining.

They run it.

The Shift: From Ad Hoc Research to a Living System

Ad hoc question research looks like this:

Someone pulls Reddit threads once a quarter.

Someone skims a few sales calls.

Someone pastes notes into a doc.

Content gets created.

The doc dies.

A real question mining system looks different.

It has defined inputs, a shared backlog, clear ownership, a regular cadence, and feedback loops from performance. Most importantly, it treats questions as infrastructure, not inspiration.

If AEO is about owning answers, question mining is how you decide which answers deserve to exist in the first place.

The Full Question Mining Stack

At a minimum, a functioning system has five layers. Miss one, and things break quietly.

1. Source intake

Questions flow in from everywhere. Reddit, sales calls, support tickets, reviews, internal search, social threads. This is raw, messy, human language. That’s a feature, not a bug.

2. Normalization

The same question shows up phrased ten different ways.

“What’s the downside?”

“What breaks?”

“Is this risky long term?”

They are the same question.

If you don’t normalize, you mistake repetition for novelty.
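
A minimal sketch of what normalization does, assuming a small hand-curated cue table. The canonical wording and the cue words are hypothetical; real systems would likely use embeddings or a language model to propose the groupings, with a person approving them.

```python
from collections import Counter

# Hypothetical canonical question and the cue words that map to it.
# In practice this table would be curated by a person or proposed by a model.
CANONICAL_CUES = {
    "What are the long-term downsides and risks?": ("downside", "break", "risky"),
}


def normalize(raw_question: str) -> str:
    """Map a raw phrasing to its canonical question, or return it unchanged."""
    text = raw_question.lower()
    for canonical, cues in CANONICAL_CUES.items():
        if any(cue in text for cue in cues):
            return canonical
    return raw_question.strip()


raw_questions = [
    "What's the downside?",
    "What breaks?",
    "Is this risky long term?",
    "How much setup does this usually take?",
]
counts = Counter(normalize(q) for q in raw_questions)
for question, count in counts.most_common():
    print(count, question)
```

Counting after normalization is the point: three phrasings collapse into one recurring uncertainty instead of looking like three new ideas.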

3. Qualification (the AEO filter)

Not every question matters. You filter for decision proximity, reusability in AI answers, and whether your brand could credibly be cited. This is where judgment matters most.

4. Clustering by intent

Questions don’t live alone. They form gravity wells. You cluster by evaluation, fear, outcome, and process. Not by keywords. Not by topic.

5. Answer mapping

Every qualified cluster maps to a canonical answer, a format (page, section, inline, sales asset), and a primary owner. If a question has no answer owner, it doesn’t exist.
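
The answer-mapping layer can be sketched as a small record per cluster. Everything here is an assumption made for illustration: the `QuestionCluster` class, its field names, and the sample backlog are hypothetical, and the only rule encoded is the one above, that a cluster without a format and an owner is treated as unmapped.

```python
from dataclasses import dataclass
from typing import List, Optional

# Formats named in the text; extend this set to match your own surfaces.
ANSWER_FORMATS = {"page", "section", "inline", "sales asset"}


@dataclass
class QuestionCluster:
    canonical_question: str
    intent: str  # e.g. evaluation, fear, outcome, process
    answer_format: Optional[str] = None
    owner: Optional[str] = None

    def is_mapped(self) -> bool:
        """A cluster counts as mapped only with a known format and a named owner."""
        return self.answer_format in ANSWER_FORMATS and bool(self.owner)


def unmapped(clusters: List[QuestionCluster]) -> List[QuestionCluster]:
    """Return the clusters that still need a format, an owner, or both."""
    return [c for c in clusters if not c.is_mapped()]


backlog = [
    QuestionCluster(
        "Who is this product built for, and where does it break down?",
        intent="evaluation",
        answer_format="page",
        owner="product marketing",
    ),
    QuestionCluster("Why does setup take longer than expected?", intent="process"),
]
for cluster in unmapped(backlog):
    print("Needs an owner and format:", cluster.canonical_question)
```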

Where Teams Usually Fail

Most breakdowns happen in the middle.

Teams are good at collecting questions.

They are bad at deciding which ones matter.

They are terrible at maintaining momentum.

The result is a pile of “interesting questions” and very few owned answers.

A system fixes this by forcing decisions.

What are we answering next?

What are we ignoring on purpose?

What already has an answer that just needs tightening?

Human Judgment vs. Automation (The Right Split)

Automation is not here to replace thinking. It’s here to prevent blind spots.

At scale, humans struggle with volume, inconsistency, and pattern recognition across sources. This is where tools like AirOps earn their place in the stack.

Used well, automation helps teams aggregate questions across every source that matters, normalize wildly different phrasing without losing intent, surface repeated uncertainty AI systems will encounter, and cluster questions faster than any manual process ever could.

What automation should not do is decide what’s strategically important, define what a “good” question is, or own the final answer.

Humans still do that.

The best teams use automation to widen their field of vision, then apply judgment ruthlessly.

A Simple Operating Cadence (Steal This)

Weekly

New questions flow into a shared backlog.

Obvious duplicates get merged.

One or two new clusters get flagged.

Monthly

Teams review clusters through an AEO lens.

Decide what deserves a canonical answer.

Assign ownership and format.

Quarterly

Review which answers are getting reused in AI.

Update language as questions evolve.

Retire answers that no longer matter.

This is boring. That’s why it works.

The Point of the System

A question mining system doesn’t exist to generate ideas.

It exists to make sure the most important questions never get missed, the same confusion doesn’t resurface every quarter, and your brand steadily becomes the clearest explanation in the category.

In an AI-first discovery world, that’s not a content advantage.

It’s a compounding one.

4. Reddit: Where Unfiltered Buyer Truth Lives

If you want honest questions, don’t start with search tools.

Start with Reddit. This has become my favorite question mining source.

Reddit is where people go when:

They don’t trust marketing

They don’t want to talk to sales

They want answers from people who have already lived with the decision

That’s why Reddit is one of the highest-signal sources for AEO-grade question mining. Not because it’s clean or structured. Because it isn’t.

Reddit doesn’t show you what people say they care about.

It shows you what they’re worried about when no one is watching.

Why Reddit Is So Powerful for AEO

From an AEO perspective, Reddit matters for three reasons:

LLMs actively ingest Reddit as a source of real-world explanation and sentiment

Questions are phrased in natural, emotional language, not SEO language

The same uncertainty shows up repeatedly across threads, even when phrasing changes

If AI systems are trying to explain how something actually works, Reddit is often where that explanation starts.

Step 1: Choose Subreddits by Buyer Maturity (Not Topic)

Most teams get this wrong immediately.

They search for subreddits about their product category. That’s lazy.

Instead, map subreddits to buyer stage.

Early-stage uncertainty:

r/startups

r/Entrepreneur

r/marketing

r/smallbusiness

Mid-stage evaluation:

r/SaaS

r/webdev

r/ProductManagement

r/growthmarketing

Late-stage, post-decision reality:

r/sysadmin

r/devops

r/dataengineering

r/cscareerquestions (for tooling decisions)

You’re not looking for mentions of your brand.

You’re looking for decision stress.

Step 2: Ignore Posts. Mine the Comments.

This is critical.

Post titles are optimized for attention.

Comments are optimized for truth.

The highest-value questions often appear:

As follow-ups

As rebuttals

As “wish I had known this earlier” replies

Buried three levels deep

Example:

Post title:

“Is headless CMS worth it?”

Low signal.

Comment buried in the thread:

“We went headless and marketing couldn’t ship without engineering for six months. How do teams actually avoid that?”

That comment is gold.

That’s an AEO-grade question.
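
To make "mine the comments" concrete, here is a small sketch that walks a nested comment tree and keeps only comments that contain a question, along with how deep they sit. The dictionary shape (`body`, `replies`) is an assumption for illustration and is not the format of any particular Reddit export or scraping tool.

```python
from typing import Iterator, Tuple


def question_comments(comment: dict, depth: int = 1) -> Iterator[Tuple[int, str]]:
    """Yield (depth, body) for every comment in the tree that contains a question mark."""
    body = comment.get("body", "")
    if "?" in body:
        yield depth, body
    for reply in comment.get("replies", []):
        yield from question_comments(reply, depth + 1)


# Hypothetical thread: the question that matters is three levels down.
thread = {
    "body": "Depends on your team.",
    "replies": [
        {
            "body": "We tried it last year.",
            "replies": [
                {
                    "body": (
                        "We went headless and marketing couldn't ship without "
                        "engineering for six months. How do teams actually avoid that?"
                    ),
                    "replies": [],
                }
            ],
        }
    ],
}
for depth, body in question_comments(thread):
    print(depth, body)
```

A question mark is a crude test and will miss doubts phrased as statements, so treat the output as a shortlist for human review.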

Step 3: Learn to Spot High-Signal Question Patterns

Not all Reddit questions are equal. The best ones fall into a few recognizable patterns.

Rage posts

“This tool is a nightmare. Nothing works the way sales promised.”

These reveal expectation gaps AI systems will try to explain later.

Regret posts

“If I were starting over, I wouldn’t choose X.”

These generate the most reusable answers in AI comparisons.

Comparison threads

“X vs Y vs Z for a team like mine.”

These heavily influence how AI frames tradeoffs.

Quiet fear questions

“I might be missing something here, but…”

These often surface the real blocker.

Train yourself to look for emotion plus uncertainty. That’s where decisions live.
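
As a rough first pass, the four patterns can be tagged with simple cue phrases before a person reads the shortlist. The cue lists below are hypothetical and drawn from the example quotes above; they are a starting point to tune, not a validated classifier.

```python
import re
from typing import List

# Hypothetical cue phrases per pattern, taken from the example quotes in this section.
PATTERN_CUES = {
    "rage": re.compile(r"\b(nightmare|nothing works|sales promised)\b", re.IGNORECASE),
    "regret": re.compile(r"\b(if i were starting over|wish i had known)\b", re.IGNORECASE),
    "comparison": re.compile(r"\bvs\.?\b", re.IGNORECASE),
    "quiet_fear": re.compile(r"\b(i might be missing something|am i just)\b", re.IGNORECASE),
}


def tag_patterns(text: str) -> List[str]:
    """Return the names of every pattern whose cue phrases appear in the text."""
    return [name for name, cue in PATTERN_CUES.items() if cue.search(text)]


samples = [
    "This tool is a nightmare. Nothing works the way sales promised.",
    "If I were starting over, I wouldn't choose X.",
    "X vs Y vs Z for a team like mine.",
    "I might be missing something here, but is this normal?",
]
for sample in samples:
    print(tag_patterns(sample), sample)
```

Cue matching only narrows the pile. Deciding whether a tagged comment carries real decision stress is still a judgment call.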

Step 4: Turn Chaos into Structured Signal

This is where most teams give up.

Reddit is noisy. Threads are long. Language is inconsistent. Doing this manually does not scale.

This is where workflow automation matters.

Tools like Gumloop make Reddit mining dramatically easier because they have native Reddit scraping nodes (these nodes are awesome, so easy to use). You can:

Pull posts and comments from specific subreddits

Filter by keywords, sentiment, or post type

Pass raw text into downstream workflows automatically

From there, systems like AirOps become the backbone of the process.

Used together, teams can:

Aggregate Reddit questions alongside sales calls and support tickets

Normalize wildly different phrasing into shared question clusters

Detect repeated uncertainty AI systems will encounter

Flag questions that deserve canonical answers

Automation doesn’t replace judgment.

It makes Reddit usable at scale.

Step 5: Apply the AEO Filter Ruthlessly

Once you’ve surfaced questions, most of them still won’t matter.

Run each Reddit-derived question through this filter:

Is this a real decision someone is trying to make?

Would AI systems reasonably try to answer this?

Does this shape how the category gets explained?

Could our brand credibly be cited here?

If the answer is no, discard it.

If yes, it goes into the backlog.

This is where most teams fail. They keep everything. Elite teams curate aggressively.

A Concrete Example (What This Looks Like in Practice)

I did this firsthand at Webflow when we were trying to understand why our freelancer segment was eroding.

We didn’t start with surveys.

We didn’t start with keyword tools.

We scraped the hell out of every community where freelancers actually hang out.

Reddit threads.

Community forums.

Long comment chains.

Rants.

Regret posts.

Quiet “I might be done with this” conversations.

Not to look for brand mentions.

To look for friction.

Here’s what surfaced over and over, phrased a hundred different ways:

Raw community sentiment:

“Webflow feels insanely powerful, but it’s starting to feel like too much for solo work. Am I just bad at this, or did something change?”

Normalized question:

“Why does Webflow feel more complex for freelancers than it used to?”

Clustered intent:

Fear + evaluation

What mattered wasn’t the wording. It was the uncertainty underneath.

We used those insights to:

Prioritize AEO answers that directly addressed freelancer concerns

Rewrite content to explain tradeoffs more honestly

Shape campaigns around reassurance, not features

Inform positioning decisions across content, SEO, and growth

Those answers didn’t just live on pages.

They showed up in:

AI-generated explanations of Webflow

Comparisons against simpler tools

Conversations before anyone ever talked to sales

That’s the leverage.

The Real Value of Reddit for AEO

Reddit doesn’t tell you what to write.

It tells you what people are still confused about after reading everything else.

If your answers don’t exist there, AI systems will borrow explanations from someone else who took the time to listen.

Reddit is messy.

Emotional.

Hard to mine.

That’s exactly why it works.

5. Sales Calls & Gong: Revenue-Critical Questions

If Reddit shows you raw buyer anxiety, sales calls show you where deals actually stall.

This is the highest-leverage question source most marketing teams underuse. Not because the data is not there. Because it feels uncomfortable. Sales questions expose confusion, hesitation, and mistrust in real time.

From an AEO lens, that is gold.

Sales calls are where questions stop being theoretical and start being expensive.

Why Sales Questions Matter More Than Any Other Source

Every sales question has three properties that make it uniquely powerful for AEO:

The buyer is already qualified

The question appears right next to a decision

The answer directly affects revenue

If an AI system is trying to explain whether a product is worth it, sales objections are often the clearest articulation of what actually matters.

This is why sales calls should feed your AEO strategy continuously, not occasionally.

Pre-Price vs. Post-Price Questions: This Split Is Everything

One of the most important distinctions when mining sales calls is when the question appears.

Pre-price questions sound like curiosity.

They are about fit, capability, and confidence.

Examples:

How much setup does this usually take?

What kinds of teams struggle with this?

Where do people underestimate the effort?

These questions determine whether a buyer will even accept the price later.

Post-price questions sound like objections.

They are about justification, risk, and internal alignment.

Examples:

Why is this more expensive than X?

What happens if we do not fully adopt it?

How do teams justify this internally?

AI systems tend to mirror this structure when explaining tradeoffs. If your content only answers one side, the explanation is incomplete.

This is where a revenue platform like Gong becomes powerful. Gong allows teams to analyze themes based on deal stages as defined in their CRM. You are not just analyzing questions. You are analyzing when they surface in the buying journey.

That same segmentation logic can be applied across industry, account size, region, or customer segment. Different revenue ecosystems generate different question patterns. Mining those patterns produces more precise answers.

This matters for AEO because LLMs aim to deliver contextually relevant responses. The more clearly your site reflects the unique pain points of specific personas or segments, the more likely your brand is to appear in answers tailored to that exact user prompt.

Elite AEO teams mine both pre-price and post-price questions intentionally.

Objections Disguised as Curiosity

The most valuable sales questions rarely sound hostile.

They sound polite.

How scalable is this?

Is this overkill for a team like ours?

What do companies regret not thinking about first?

These are not informational questions.

They are risk probes.

If your content answers the surface question but ignores the underlying fear, the answer will not stick. And AI systems will not reuse it.

Sales calls teach you how buyers actually phrase doubt. That language is exactly what AEO answers need to reflect.

A quick note of appreciation to Gong Labs here. Their first-party research reinforces just how often objections are disguised as curiosity. In their analysis of real sales conversations, buyers rarely present resistance directly. Instead, they surface concerns as exploratory questions tied to implementation risk, scalability, internal buy-in, or long-term uncertainty. Their work on handling objections with AI and tools like AI Theme Spotter highlights how consistently this pattern appears across industries and deal types.

The question may sound casual. The revenue impact is not.

Enterprise-Only Questions: Do Not Ignore These

Enterprise questions are easy to dismiss because they do not show up at volume.

That is a mistake.

Enterprise buyers ask questions like:

How does this break at scale?

What governance models actually work?

Where does this fall down in complex organizations?

These questions heavily influence how AI frames whether a product is enterprise-ready or SMB-only.

If your brand never answers them publicly, AI systems will infer the answer anyway. Usually not in your favor.

How to Mine Sales Calls at Scale Without Burning the Team

This does not require manual listening marathons.

With Gong, you already have:

Transcripts

Objection tags

Topic frequency over time

Stage segmentation tied to CRM definitions

Layer in workflow automation and this becomes even more powerful.

Teams now use workflow tools to:

Pull transcripts automatically

Extract question-shaped sentences

Group questions by stage, deal size, or outcome

Feed those questions into structured systems
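
The extraction and grouping steps above can be sketched in a few lines. This assumes transcripts have already been exported as a list of utterances with a `stage` label; that shape, the stage names, and the function names are hypothetical and do not reflect Gong's actual export format or API.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Naive sentence splitter: a run of text ending in ., ! or ?
SENTENCE_RE = re.compile(r"[^.!?]+[.!?]")


def extract_questions(text: str) -> List[str]:
    """Return the sentences in the text that end with a question mark."""
    sentences = (s.strip() for s in SENTENCE_RE.findall(text))
    return [s for s in sentences if s.endswith("?")]


def group_by_stage(utterances: List[dict]) -> Dict[str, List[str]]:
    """Collect question-shaped sentences under the deal stage they were asked in."""
    grouped = defaultdict(list)
    for utterance in utterances:
        questions = extract_questions(utterance["text"])
        if questions:
            grouped[utterance["stage"]].extend(questions)
    return dict(grouped)


utterances = [
    {"stage": "pre_price", "text": "Thanks for the demo. How much setup does this usually take?"},
    {"stage": "post_price", "text": "That is higher than we expected. Why is this more expensive than X?"},
    {"stage": "post_price", "text": "How do teams justify this internally?"},
]
for stage, questions in group_by_stage(utterances).items():
    print(stage, questions)
```

The splitter will stumble on abbreviations and on doubts phrased as statements, so it is best seen as a way to build a first backlog that a person then reviews.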

It is worth making an important distinction here. In theory, you could paste transcripts into a generic LLM and ask it to extract themes. In practice, that is not the same as using a purpose-built AI platform trained on millions of revenue interactions. Platforms like Gong (with their native agents) are optimized specifically for sales and customer conversations. Pattern detection becomes far more precise because it is grounded in first-party revenue data, not just prompt interpretation.

From there, AirOps becomes the control plane.

Sales phrasing becomes canonical answers.

Those answers get distributed across GTM surfaces.

The system stays consistent instead of fragmenting into one-off assets.

When transcripts include rich metadata such as stage, segment, win or loss, and objection tags, the prioritization becomes even sharper. AirOps can separate curiosity from risk probes and produce a ranked backlog of revenue-blocking questions.

Automation handles the scale.

Humans decide what matters.

What This Looked Like in the Real World

I saw this clearly while advising a B2B2C healthcare AI company selling into mid-market and enterprise teams.

Deals were not dying early.

They were stalling late.

Pipeline was strong. Demos landed. Pricing was not the issue. But once buyers entered serious evaluation, momentum slowed.

So we mined sales calls. Not to coach reps. To extract questions.

One pattern surfaced constantly:

Is this actually built for teams our size?

How many customers like us are really successful with this?

Are we going to outgrow this in a year?

None of these sounded confrontational. All of them signaled positioning uncertainty.

We normalized them into a single AEO-grade question:

Who is this product actually built for, and where does it break down?

That question became the spine.

We used it to:

Create AEO content explaining ideal fit and non-fit

Rewrite comparison pages to lead with tradeoffs

Shape campaign messaging around clarity

Align sales on a consistent answer

What changed was not volume.

It was understanding.

That answer started appearing:

In AI-generated category explanations

In third-party comparisons

In buyer conversations before sales ever got involved

Prospects arrived better informed.

Late-stage objections dropped.

Sales cycles shortened.

That is the leverage.

Do Not Stop at Prospect Calls

Question mining should not end with new business.

Conversations with existing customers surface implementation friction, adoption challenges, and retention risks. These questions often predict churn long before cancellation.

Retention and expansion are revenue metrics.

Existing customers are also using LLMs to answer their questions. If your brand does not have a clear explanation publicly available, someone else’s framing fills that gap.

Sales call mining is not just about closing new business. It is about shaping explanation across the entire customer lifecycle.

Beyond AEO: The Organizational ROI

Independent of AEO, publishing answers based on real prospect and customer questions drives measurable ROI.

It shortens sales cycles.

It reduces repetitive objections.

It improves onboarding clarity.

It creates a feedback loop for product teams.

For product marketing, this is extremely attractive. The ROI is not theoretical. It is tied directly to revenue efficiency and customer clarity.

Question mining becomes easier to fund when it is positioned as cross-functional infrastructure, not just SEO or AI visibility work.

AEO is one outcome.

Revenue efficiency is another.

It is a many-birds-one-stone strategy.

The AEO Takeaway

Sales calls do not just tell you what to fix in the funnel.

They tell you what AI systems will struggle to explain unless you do it first.

If the same question keeps stalling deals, it deserves:

A clear answer

A canonical explanation

Ownership across content, positioning, and sales

Because in AEO, revenue-blocking questions are the highest-priority questions you can mine.

6. Customer Support & Tickets: Post-Purchase Reality

If sales calls show you where deals stall, support tickets show you where expectations break.

This is post-purchase truth.

Support questions are not hypothetical. They come from people who already chose you, already paid, and are now trying to make the decision feel right.

From an AEO perspective, that makes them incredibly valuable.

Support tickets reveal the gap between what buyers thought they were buying and what they’re actually experiencing.

That gap is where churn lives.

Why Support Questions Matter So Much for AEO

Support questions have four properties that make them uniquely powerful:

The buyer has already committed

The question appears right after reality hits

The confusion is expensive

The language is painfully honest

AI systems care deeply about this kind of signal. When they explain a product, they often borrow from the same misunderstandings real users surface after purchase.

If you don’t answer these questions publicly, AI systems will infer the answers anyway. Usually from other users, forums, or competitors.

Expectation Gaps vs. Simple How-To Confusion

Not every support question is an AEO opportunity.

The key is separating how-to confusion from expectation failure.

How-to questions sound like this:

“Where do I click to do X?”

“How do I turn this setting on?”

“Is there a doc for this?”

These matter for UX and docs. They are not AEO-critical.

Expectation gap questions sound different:

“I thought this would do X automatically”

“Why is this more manual than I expected?”

“Do most customers run into this?”

These are positioning problems disguised as support tickets.

Those are gold.
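A first-pass triage of the two question types can be sketched in a few lines. This is a rough illustration, not a production classifier: the cue phrases are assumptions lifted from the example tickets above, and the function name `classify_ticket` is hypothetical. Anything it cannot place is returned as "unclear" so a human can decide.

```python
import re

# Hypothetical cue phrases, taken from the example tickets in this section.
EXPECTATION_CUES = [
    r"\bi thought\b",
    r"\bthan i expected\b",
    r"\bdo most customers\b",
    r"\bautomatically\b",
]
HOW_TO_CUES = [
    r"\bwhere do i\b",
    r"\bhow do i\b",
    r"\bis there a doc\b",
]


def classify_ticket(text: str) -> str:
    """Return 'expectation_gap', 'how_to', or 'unclear' for one ticket."""
    lowered = text.lower()
    # Expectation cues are checked first because they carry the AEO signal.
    if any(re.search(pattern, lowered) for pattern in EXPECTATION_CUES):
        return "expectation_gap"
    if any(re.search(pattern, lowered) for pattern in HOW_TO_CUES):
        return "how_to"
    return "unclear"
```

In practice the cue lists would be tuned against your own tickets, and the "unclear" pile is where human review earns its keep.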

Onboarding Questions That Quietly Predict Churn

Some questions show up early and then disappear.

Others show up early and never go away.

Pay attention to questions that:

Appear in the first 30 days

Show up repeatedly across customers

Resurface right before churn or downgrade

Examples:

“Is this the intended workflow?”

“Am I using this wrong, or is this just how it works?”

“What does a successful setup actually look like?”

These are not beginner questions. They are confidence questions.

If users never get clarity here, they don’t complain. They leave.
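The three criteria above (early, repeated, resurfacing before churn) can be expressed as a simple filter. This is a sketch under assumptions: the `Ticket` fields, the function name, and the thresholds (`min_accounts=3`, a 30-day churn window) are illustrative, and questions are assumed to be normalized before they reach this step.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Ticket:
    account_id: str
    question: str                            # assumed already normalized upstream
    days_since_signup: int
    days_until_churn: Optional[int] = None   # None if the account never churned


def churn_predicting_questions(tickets, min_accounts=3, early_days=30, churn_window=30):
    """Return questions asked early by several accounts that also resurface before churn."""
    early = defaultdict(set)      # question -> accounts asking within early_days
    pre_churn = defaultdict(set)  # question -> accounts asking shortly before churn
    for t in tickets:
        if t.days_since_signup <= early_days:
            early[t.question].add(t.account_id)
        if t.days_until_churn is not None and t.days_until_churn <= churn_window:
            pre_churn[t.question].add(t.account_id)
    return sorted(
        question
        for question, accounts in early.items()
        if len(accounts) >= min_accounts and question in pre_churn
    )
```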

Feature Confusion vs. Positioning Failure

A simple rule:

If many customers misunderstand the same thing, it’s not a feature problem. It’s a positioning problem.

Support tickets are where that truth shows up first.

When you see questions like:

“Why can’t this do X like Y?”

“Is this missing, or am I missing something?”

“Why does this feel more complex than advertised?”

That’s not a roadmap issue. That’s an explanation issue.

And explanation issues are prime AEO opportunities.

Why Support Questions Convert Insanely Well

This surprises teams.

Content built from support questions often converts better than top-of-funnel content because it:

Meets buyers at a moment of clarity

Preempts regret

Builds trust through honesty

Reduces post-purchase anxiety

From an AEO standpoint, these answers also get reused heavily.

AI systems love explanations that say:

“Here’s what this does, what it doesn’t do, and what most people misunderstand.”

That language comes straight from support.

How to Mine Support Questions at Scale

This does not require ticket-by-ticket review.

Modern workflows make this manageable.

Support systems already tag:

Ticket topics

Time to resolution

Churn-adjacent conversations

With workflow automation, teams can:

Pull ticket transcripts automatically

Extract question-shaped language

Group repeated confusion across accounts

From there, AirOps helps teams:

Normalize how different users phrase the same misunderstanding

Cluster questions by intent and lifecycle stage

Identify which gaps deserve public AEO answers

Track when explanations reduce repeat tickets

Support, content, and AEO stop operating in silos.
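To make the extraction and grouping steps concrete, here is a minimal sketch that does not depend on any particular tool. It assumes tickets arrive as `(account_id, transcript)` pairs, treats any sentence ending in a question mark as question-shaped, and uses a deliberately crude normalizer. The function names are hypothetical, and real phrasing variation needs something smarter than punctuation stripping.

```python
import re
from collections import defaultdict


def extract_questions(transcript):
    """Split a transcript into sentences and keep those ending in '?'."""
    sentences = re.split(r"(?<=[.?!])\s+", transcript.strip())
    return [s.strip() for s in sentences if s.strip().endswith("?")]


def normalize(question):
    """Crude normalization: lowercase, drop punctuation, collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", question.lower())
    return re.sub(r"\s+", " ", cleaned).strip()


def repeated_across_accounts(tickets, min_accounts=2):
    """Map each normalized question to the number of distinct accounts asking it."""
    accounts = defaultdict(set)
    for account_id, transcript in tickets:
        for question in extract_questions(transcript):
            accounts[normalize(question)].add(account_id)
    return {q: len(ids) for q, ids in accounts.items() if len(ids) >= min_accounts}
```

Counting distinct accounts rather than raw tickets keeps one very vocal customer from looking like a pattern.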

What This Looks Like in Practice

A recurring support question:

“Why do I still need to do this manually?”

Normalized AEO question:

“What does this product automate vs. require ongoing work?”

Intent cluster:

Expectation gap + outcome

That answer becomes:

A public AEO explanation

An onboarding asset

A sales enablement reference

A churn prevention lever

One answer. Multiple surfaces.

The AEO Takeaway

Support tickets are not just a cost center.

They are a live feed of unanswered questions that buyers and AI systems are both trying to resolve.

If the same confusion keeps showing up after purchase, it deserves a clear, public answer.

Because in an AEO world, the fastest way to lose trust isn’t bad marketing.

It’s unmet expectations.

7. Social (X, LinkedIn): Ambient Curiosity at Scale

Search shows you what people ask when they’re intentional.

Social shows you what people wonder about when they’re scrolling.

That difference matters.

X and LinkedIn are not where buyers go to research. They’re where confusion, half-formed opinions, and misconceptions surface in public. That makes social one of the best sources for early-stage AEO signals, if you know how to mine it without getting distracted by engagement.

Social isn’t about demand capture.

It’s about demand formation.

And AI systems watch it closely.

Why Social Matters for AEO (Even If You Hate Social)

From an AEO lens, social is valuable because:

People ask questions they wouldn’t type into search

Misunderstandings spread faster than corrections

The same confusion shows up repeatedly in comment threads

AI systems absorb the dominant explanations over time

If Reddit shows you raw buyer truth, social shows you ambient confusion at scale.

That confusion eventually turns into search queries, sales objections, and support tickets.

This is upstream signal.

Comment Threads Are Live FAQ Testing

Ignore the post. Read the comments.

The real questions rarely appear in the main post. They show up underneath it, often phrased as pushback or clarification.

Examples:

“Wait, does this mean you can’t do X?”

“How is this different from what we already do?”

“This sounds nice, but what breaks in practice?”

Those are not engagement comments.

They are unanswered FAQs forming in public.

When the same question appears across multiple posts, you’ve found something AEO-grade.

Quote Tweets and Reposts Create Misconception Loops

Quote tweets and reposts are especially dangerous.

Someone paraphrases an idea slightly wrong.

Someone else reacts to that version.

The explanation drifts.

The wrong framing spreads.

Before long, AI systems start borrowing the distorted explanation because it appears more frequently than the original.

This is how brands lose narrative control.

If you see repeated quote tweets saying:

“So this basically means X is dead…”

That’s not a hot take.

That’s a misconception loop forming.

Those loops are high-priority AEO opportunities.

Founder Explanations Gone Wrong (High Signal, Low Ego)

Founder posts are another goldmine.

Not because founders are wrong.

Because explanations often assume too much context.

When a founder explains something and the comments fill with:

“Can you explain this more simply?”

“Who is this actually for?”

“So does this replace X or not?”

That’s not ignorance.

That’s a signal the explanation didn’t land.

Those gaps are exactly what AI systems struggle with later.

Mining founder comment threads is one of the fastest ways to identify where industry language has outpaced understanding.

How to Extract Signal Without Chasing Virality

This is where most teams fail.

They chase likes instead of patterns.

The goal is not to find viral posts. It’s to find repeated confusion.

Here’s how to do it cleanly:

Track recurring questions across multiple posts, not one

Ignore engagement metrics initially

Look for the same question phrased differently

Focus on comments that ask “how,” “why,” or “what does this mean in practice”

One post means nothing.

Five posts saying the same thing means everything.
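The rule of thumb above translates into a small counting exercise. The sketch below assumes each comment has already been tagged with a normalized question label (by a reviewer or an upstream step) and carries a hypothetical `likes` field, which is intentionally never read. The threshold of five posts mirrors the line above and is easy to change.

```python
from collections import defaultdict


def recurring_confusion(comments, min_posts=5):
    """comments: iterable of dicts with 'post_id', 'question', and 'likes'.

    'question' is assumed to be a normalized label assigned upstream.
    """
    posts = defaultdict(set)
    for comment in comments:
        # 'likes' is deliberately ignored: recurrence, not reach, is the signal.
        posts[comment["question"]].add(comment["post_id"])
    hits = [(q, len(ids)) for q, ids in posts.items() if len(ids) >= min_posts]
    # Most widespread confusion first, ties broken alphabetically.
    return sorted(hits, key=lambda item: (-item[1], item[0]))
```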

Making This Scalable (Without Living on X)

You do not need to manually scroll all day.

Workflow automation makes this manageable.

Teams now use tools like Gumloop to:

Pull comments and quote tweets from defined accounts or topics

Extract question-shaped language

Pass that text into downstream analysis automatically

From there, AirOps helps teams:

Normalize phrasing across posts and platforms

Cluster repeated confusion by intent

Flag which misconceptions deserve canonical AEO answers

Track whether explanations improve over time

Automation collects.

Humans decide.

A Concrete Example

Repeated social comments:

“Is this just SEO with AI slapped on?”

“So do we still need content or not?”

“This feels like more work, not less.”

Normalized AEO question:

“How does AEO actually change what teams do day to day?”

Intent cluster:

Evaluation + fear

That answer becomes:

A public explanation AI systems reuse

A positioning clarification

A sales enablement asset

A filter that keeps the wrong buyers out

One ambient question. Multiple surfaces.

The AEO Takeaway

Social doesn’t tell you what people are searching for.

It tells you what they don’t fully understand yet.

If you ignore it, misconceptions spread unchecked.

If you mine it well, you shape the explanation before AI systems do.

That’s leverage at scale.

8. Reviews, G2, and “I Wish I’d Known” Questions

If sales calls show you hesitation and support tickets show you confusion, reviews show you regret.

This is post-decision clarity.

Reviews are not feedback. They’re delayed questions buyers wish they had asked earlier. And that makes them incredibly valuable for AEO, because AI systems lean heavily on post-purchase language when explaining tradeoffs.

This is where truth surfaces without filters.

Reviews Are Just Questions Asked Too Late

Most reviews aren’t statements. They’re answers to unasked questions.

Read them carefully and you’ll see the pattern:

“Great product, but I didn’t realize how much setup was involved”

“Works well once you learn it, but there’s a steep curve”

“Powerful, but probably overkill for smaller teams”

Those are not opinions.

They’re unresolved questions in hindsight.

Each one maps cleanly to a buyer uncertainty that never got addressed clearly enough before purchase.

That’s an AEO opportunity.

Why Reviews Matter So Much for AEO

From an AEO perspective, reviews matter because:

They describe tradeoffs in plain language

They use comparison phrasing buyers actually understand

They reveal expectation gaps, not feature gaps

AI systems treat them as credibility-weighted input

When an AI model explains why someone might or might not choose a product, review language is often the backbone of that explanation.

If your brand doesn’t own those answers, the model will borrow them from reviewers instead.

Regret Signals and Trust Gaps

The most valuable review content isn’t praise or complaints. It’s regret.

Look for phrases like:

“I wish I had known…”

“In hindsight…”

“If you’re considering this, be aware…”

“This is great, but only if…”

These signal trust gaps.

Not deception.

Misalignment.

And misalignment is exactly what AEO content is supposed to fix.

The Comparison Language Buyers Actually Use

Comparison pages written by marketers rarely match how buyers compare tools in reality.

Reviews do.

Buyers say things like:

“This feels more flexible, but slower”

“Cheaper upfront, more expensive over time”

“Better for teams, worse for solo work”

“Powerful once set up, frustrating before that”

That language is gold.

It’s the phrasing AI systems reuse when summarizing pros and cons across tools.

If your comparison content doesn’t reflect this language, it won’t get cited.

How to Mine Reviews Systematically

This is not about reading a few five-star reviews.

You want scale and pattern recognition.

Start with platforms like G2, app marketplaces, and public review sites.

Then focus on:

3–4 star reviews (highest signal)

Negative reviews that still recommend the product

Positive reviews with caveats

Extract:

Repeated “but” statements

Setup and onboarding mentions

Fit and non-fit language

Comparison phrasing

Those are your inputs.
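As an illustration of the filtering and extraction steps, the sketch below works on reviews represented as plain dictionaries. The field names (`stars`, `text`, `recommends`) are assumptions, not any review platform's actual export format, and the regret phrases are the ones listed earlier in this section.

```python
import re

# Regret phrases taken from the examples earlier in this section.
REGRET_PHRASES = ("i wish i had known", "in hindsight", "be aware", "only if")


def but_clauses(text):
    """Return the clause that follows each 'but' in a review."""
    matches = re.findall(r"\bbut\b,?\s+([^.!?]+)", text, flags=re.IGNORECASE)
    return [m.strip(" .!") for m in matches]


def regret_signals(text):
    """Return the regret phrases that appear in a review."""
    lowered = text.lower()
    return [phrase for phrase in REGRET_PHRASES if phrase in lowered]


def is_high_signal(review):
    """3-4 stars, negative but still recommending, or positive with a caveat."""
    stars = review["stars"]
    if 3 <= stars <= 4:
        return True
    if stars <= 2 and review.get("recommends", False):
        return True
    if stars == 5 and but_clauses(review["text"]):
        return True
    return False
```

The extracted "but" clauses are raw material, not conclusions. They still need to be normalized and clustered before they become AEO questions.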

Making This Scalable With Automation

Manually reading reviews doesn’t scale.

Workflow automation fixes that.

Teams use tools like Gumloop to:

Pull reviews automatically by product, category, or competitor

Extract question-shaped or regret-based language

Route that text into structured workflows

From there, AirOps becomes the system of record.

It helps teams:

Normalize wildly different review phrasing

Cluster regret signals by intent

Map recurring gaps to AEO-worthy questions

Track which explanations reduce negative sentiment over time

Automation handles volume.

Humans decide which gaps deserve public answers.

Turning Regret Into Preemptive Answers

Here’s what great teams do next.

They don’t hide the downsides.

They explain them better than anyone else.

Example:

Review language:

“Great tool, but I didn’t realize how much ongoing work it would require.”

Normalized AEO question:

“What does this product actually automate, and what still requires manual effort?”

Intent cluster:

Expectation gap + outcome

That answer becomes:

A public AEO explanation

A comparison page section

A sales enablement asset

A trust-building filter for the right buyers

The goal isn’t to convert everyone.

It’s to convert the right people with eyes open.

Why This Converts So Well

Content built from review-based questions converts insanely well because it:

Mirrors real buyer language

Acknowledges tradeoffs honestly

Prevents regret before it happens

Builds trust faster than polished marketing ever could

AI systems reward that honesty.

Buyers do too.

This matters beyond your own site. Third-party sources drive 85 percent of brand mentions in AI-generated answers. Off-site narrative is not secondary. It is a primary visibility signal.


When you answer the real questions buyers are asking, you create clearer context that other domains reference. That is how explanation spreads. And that is how visibility compounds.

The AEO Takeaway

Reviews aren’t feedback to be managed.

They’re a backlog of unanswered questions your future buyers are already asking.

If you mine them well and answer them publicly, you don’t just improve conversion.

You train AI systems to explain your product the way you would.

That’s compounding advantage.

9. Internal Search, Chat, & Zero-Result Questions

Internal search isn’t about a search box.

It’s about any place a user types a question on your site expecting you to explain something.

That might be:

A search bar on your marketing site or docs

A help center search

An in-product search or command palette

A chat tool like Drift, Qualified, or Intercom

A demo request or contact form field

Different UI. Same signal.

From an AEO perspective, these are some of the highest-intent questions you’ll ever see.

What This Signal Actually Represents

When someone types a question on your site, they’re not exploring the category.

They’re evaluating you.

They already believe:

You might be the right solution

You should have an answer

Clarification will help them decide

This is self-reported intent at the moment of friction.

Keyword tools guess.

Internal questions tell you directly.

What a “Zero-Result” Question Really Means

A zero-result question doesn’t always look like “No results found.”

It looks like:

A search that returns irrelevant pages

A chat question that triggers a handoff to sales

A repeated “Can you clarify…” message

A demo form that includes confusion in the free-text field

In every case, the meaning is the same:

A user expected an explanation.

You didn’t give one.

That gap creates doubt. And doubt doesn’t stay internal. Users take it to AI systems, Reddit, or competitors.

Why Chat Tools Often Beat Search Bars

Chat tools are often the better source, and they work even if a site doesn’t have a search bar.

Tools like Drift, Qualified, and Intercom capture:

Natural language questions

Emotional subtext

Urgency and stakes

Repetition across users

Examples:

“Is this overkill for a team our size?”

“How long does setup really take?”

“What do people usually struggle with first?”

That is internal search with context attached.

From an AEO lens, this is gold.

Navigation Gap vs. Messaging Gap (Still Critical)

Not every internal question means “write new content.”

You still need to diagnose the gap.

Navigation gap

The answer exists, but users can’t find it.

Fix UX.

Messaging gap

The answer doesn’t exist or isn’t clear.

Fix the explanation publicly.

How to tell:

If the same question appears across chat, sales, or support, it’s messaging

If it shows up repeatedly over time, it’s messaging

If it only happens once, it might be navigation

Elite teams don’t overreact. They pattern-match.
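The diagnostic rules above fit in one small function. This sketch assumes each normalized question comes with a list of `(source, iso_week)` sightings. That representation, the function name, and the "needs review" fallback for cases the rules do not cover are all assumptions.

```python
def diagnose_gap(occurrences):
    """occurrences: list of (source, iso_week) tuples for one normalized question."""
    sources = {source for source, _ in occurrences}
    weeks = {week for _, week in occurrences}
    # Seen in more than one channel, or recurring over time: the explanation is missing.
    if len(sources) > 1 or len(weeks) > 1:
        return "messaging"
    # A single sighting might just mean the answer was hard to find.
    if len(occurrences) == 1:
        return "possibly navigation"
    # Anything else (for example, a burst in one channel in one week) goes to a human.
    return "needs review"
```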

How Teams Actually Capture This Data

Most teams already have this data.

They just don’t route it anywhere useful.

You can capture internal questions from:

Site search logs

Docs and help center search

Chat transcripts (first question is highest signal)

Demo request and contact form fields

In-product prompts and failed actions

You want:

The raw question text

Frequency

Where it appeared

What happened next

That’s enough to start.

Turning This Into an AEO Backlog

Raw logs aren’t helpful by themselves.

Teams use automation to:

Pull internal questions weekly

Flag repeated or unresolved ones

Deduplicate phrasing

Surface patterns

From there, AirOps helps teams:

Normalize internal questions with sales and support language

Cluster questions by intent

Identify which gaps deserve canonical AEO answers

Track whether new explanations reduce repeat questions over time

This is how internal confusion becomes external clarity.

A Simple Example

Repeated chat question:

“Is this overkill for a small team like ours?”

Normalized AEO question:

“Who is this product best suited for, and who should not use it?”

Intent cluster:

Evaluation + fear

That answer becomes:

A public explanation section

A comparison anchor

A sales alignment asset

An AI-reusable explanation of fit

One internal question.

Multiple downstream wins.

The AEO Takeaway

You don’t need a search bar to mine internal questions.

Anywhere a user types a question expecting clarity counts.

Those questions tell you exactly where your explanation breaks down while intent is highest.

If you answer them clearly and publicly, you don’t just improve conversion.

You prevent AI systems from inventing the answer for you.

10. Clustering Questions into Answer Themes

By this point, you don’t have a question problem.

You have a too-many-questions problem.

Reddit, sales calls, support tickets, chat, reviews, internal search. They all produce valuable signal. But if every question becomes its own piece of content, you end up with sprawl. Dozens of half-answers. No clarity. No reuse.

AEO doesn’t reward volume.

It rewards coherence.

Clustering is how you get there.

Why Grouping by Topic Fails

Most teams cluster questions by topic.

Pricing.

Security.

Integrations.

Features.

That’s comfortable. It’s also wrong.

Topics describe what something is about.

Intent describes why someone is asking.

AI systems don’t care that ten questions mention “pricing.” They care whether those questions are about fear, evaluation, justification, or process.

If you cluster by topic, you create pages that talk around decisions.

If you cluster by intent, you create answers that resolve them.

Group by Intent, Not Keywords

At scale, many questions that look different are actually the same.

Examples:

“Is this overkill for us?”

“Are we too small for this?”

“Is this only for enterprise teams?”

Different wording. Same intent.

That intent is fit anxiety.

From an AEO lens, those should not be three answers. They should be one clear, canonical explanation of who the product is for and who it is not for.

This is where teams usually overproduce and under-explain.

The Four Intent Buckets That Matter Most

Almost every high-value question you’ve mined can be clustered into one of four answer themes:

Evaluation

Is this right for me?

Fear

What could go wrong?

Outcome

What actually changes if this works?

Process

What does this look like in practice?

You’re not eliminating nuance.

You’re organizing it.

Each cluster should lead to a single, durable answer that can be reused everywhere.
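One way to represent the four buckets in a backlog is sketched below. It assumes intent labels are assigned by a reviewer or an upstream model. The code only groups questions that share the same set of intents, so questions like the fit-anxiety examples above collapse into one cluster. The names `Intent` and `cluster_by_intent` are hypothetical.

```python
from collections import defaultdict
from enum import Enum


class Intent(Enum):
    EVALUATION = "Is this right for me?"
    FEAR = "What could go wrong?"
    OUTCOME = "What actually changes if this works?"
    PROCESS = "What does this look like in practice?"


def cluster_by_intent(labeled_questions):
    """labeled_questions: iterable of (question_text, set_of_Intent).

    Labels are assumed to come from a human reviewer or an upstream model.
    """
    clusters = defaultdict(list)
    for text, intents in labeled_questions:
        # frozenset makes the combination of intents usable as a dictionary key.
        clusters[frozenset(intents)].append(text)
    return dict(clusters)
```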

Identifying the Canonical Answer

A canonical answer is not a page.

It’s the clearest explanation you have for a recurring uncertainty.

You know you’ve found one when:

The same explanation satisfies multiple questions

Sales, support, and content all point to it

AI systems can reuse it without rewriting

The goal is not to answer every question individually.

The goal is to create a small number of answers so good they absorb dozens of questions.

That’s how AEO compounds.

What Deserves a Page vs. a Section vs. an Inline Answer

This is one of the most important judgment calls in the entire system.

Here’s a simple rule set that works.

Deserves a dedicated page when:

The question shapes how the category is explained

It appears across multiple sources

It influences evaluation or comparison

AI systems frequently need to reference it

Examples:

Who this product is for (and not for)

Pricing philosophy and tradeoffs

Core architectural decisions

Deserves a section when:

The question is important but secondary

It supports a larger explanation

It resolves a specific fear or objection

Examples:

“Is this overkill?”

“What happens if we don’t fully adopt it?”

Deserves an inline answer when:

The question is narrow

It removes momentary friction

It doesn’t change the overall decision

Examples:

Minor feature clarifications

Setup edge cases

This discipline prevents content bloat and keeps explanations tight.
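The rule set above can be written down as a decision function so the call is made the same way every week. This is a simplified sketch: the `QuestionProfile` fields condense the criteria into four flags, and requiring all three page criteria at once is an assumption, not something the rules prescribe.

```python
from dataclasses import dataclass


@dataclass
class QuestionProfile:
    shapes_category: bool        # changes how the category is explained
    source_count: int            # number of distinct sources where it appears
    influences_evaluation: bool  # affects evaluation or comparison
    resolves_objection: bool     # addresses a specific fear or objection


def choose_surface(q: QuestionProfile) -> str:
    """Return 'page', 'section', or 'inline' for one canonical question."""
    if q.shapes_category and q.source_count >= 2 and q.influences_evaluation:
        return "page"
    if q.resolves_objection or q.influences_evaluation:
        return "section"
    return "inline"
```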

How Automation Helps Without Flattening Thinking

Clustering is where automation actually shines.

Humans are great at judgment.

They’re bad at pattern recognition across thousands of inputs.

This is where AirOps becomes foundational.

AirOps helps teams:

Normalize different phrasings of the same question

Detect recurring uncertainty across sources

Surface high-signal opportunities

Suggest intent-based clusters

Keep clusters current as language evolves

AirOps does not replace judgment. It strengthens it.

Humans still decide:

Which intent clusters are strategic

Which deserve ownership

Which to intentionally ignore

Automation accelerates insight.

Judgment creates advantage.

A Concrete Example

Raw questions:

“Is this too complex for a small team?”

“Do we need engineers full time?”

“Is this only worth it at scale?”

Single intent cluster:

Evaluation + fear

Canonical answer:

“Who this product is built for, where it shines, and where it’s the wrong choice.”

That one answer now:

Anchors a core page

Feeds sales and onboarding

Shapes AI explanations

Absorbs dozens of individual questions

That’s leverage.

The AEO Takeaway

Clustering is not about organizing content.

It’s about reducing cognitive load for humans and machines.

When you cluster by intent, create canonical answers, and choose the right surface, you stop publishing content and start building an answer engine.

That’s when AEO becomes durable.

11. Turning Questions into an Answer Engine

By now, you don’t have a research problem.

You have clarity.

You know which questions matter. You’ve clustered them by intent. You’ve identified canonical answers. The mistake most teams make at this point is treating answers like content assets.

That’s not the job.

The job is to turn answers into an engine. Something that shows up everywhere buyers and AI systems need it, without rewriting the same explanation ten different ways.

An answer engine doesn’t live in one place.

It runs across the entire go-to-market surface area.

Start With the Canonical Answer (Always)

Every important question should have one primary answer.

Not five versions. Not a blog post plus a deck plus a help doc that all say slightly different things.

One clear explanation that:

Names the tradeoffs

Resolves the uncertainty

Can be reused without losing meaning

Everything else is a derivative.

This is the single biggest unlock for AEO. AI systems reward consistency. Humans trust it too.

FAQ Hubs, AEO Pages, and Embedded Answers

Most teams default to FAQs. That’s fine, but only if they’re treated correctly.

FAQ hubs work when:

Questions are clustered by intent

Answers are opinionated, not evasive

Each answer could stand alone in an AI response

Bad FAQs list questions.

Good FAQs resolve decisions.

For higher-impact questions, graduate them out of FAQs.

Create AEO pages when:

The question shapes how the category is explained

Buyers ask it repeatedly across sources

AI systems need a stable reference

Examples:

Who this product is for and not for

Pricing philosophy and tradeoffs

Core architectural decisions

Then embed answers everywhere else.

Inline answers on product pages.

Short explanations near CTAs.

Clarifying sections where confusion tends to spike.

The same answer. Different surfaces.

Comparison and Alternatives Content (Where AEO Wins Big)

Comparison content is where answer engines shine.

Most comparison pages are defensive. They list features. They avoid tradeoffs. AI systems see through that immediately.

High-performing AEO comparison content does three things:

Explains why teams choose each option

Names the downsides honestly

Helps the buyer self-select

That language often comes directly from:

Sales objections

Review regret

Reddit comparisons

If your canonical answers already exist, comparison content becomes assembly, not invention.

This is why teams with strong answer engines dominate AI comparisons even without ranking first.

Sales Enablement and Objection Handling

If sales is answering a question live, that answer should already exist publicly.

Every repeated objection is a signal:

Either the answer doesn’t exist

Or it exists but isn’t clear enough

Great teams do this:

Identify the top 10 revenue-blocking questions

Create canonical AEO answers for each

Train sales to reference, not reinvent

This does two things:

Sales stays consistent

AI systems learn a single explanation instead of ten variations

Sales enablement and AEO are the same work. They just operate at different moments.

In-Product Education (The Most Overlooked Surface)

The best answer engines don’t stop at the website.

They show up in-product.

Think:

Onboarding explanations

Tooltips that explain tradeoffs, not just actions

“Why this works this way” moments

When users understand why something behaves a certain way, support tickets drop and trust increases.

Those explanations often become the exact phrasing AI systems reuse later.

Post-purchase clarity feeds pre-purchase understanding.

Keeping the Engine Alive

An answer engine only works if it stays current.

Language shifts. Buyer expectations evolve. New questions emerge.

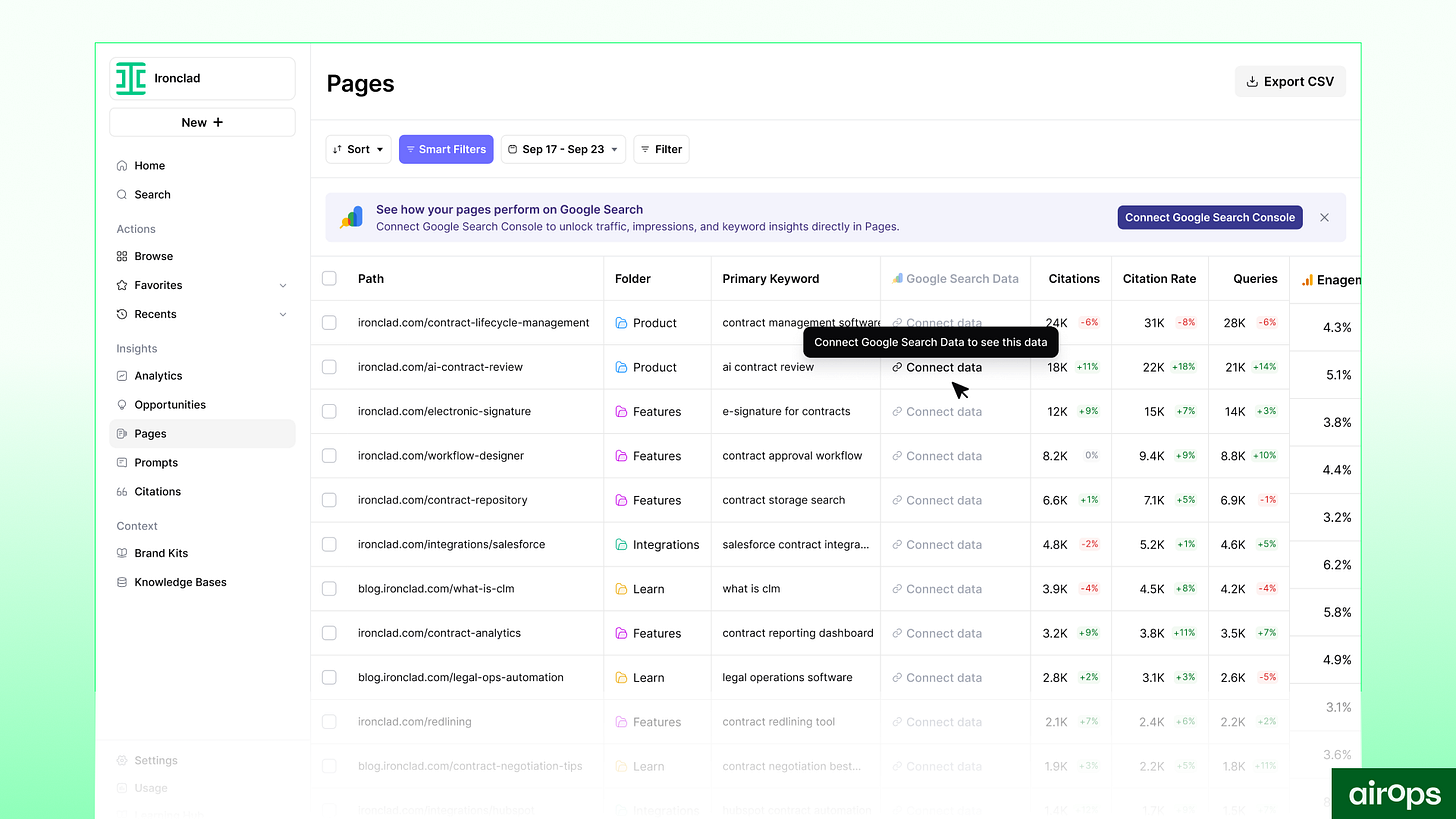

I have been enjoying AirOps lately and getting deeper into using it to build infrastructure. AirOps isn’t just for monitoring. It’s the execution layer that turns question signals into a prioritized backlog, ships canonical answers across surfaces, and keeps those answers current as language drifts.

Think of AirOps as answer-maintenance infrastructure: it detects drift, flags which canonical answers need attention, and helps teams update once and propagate everywhere.

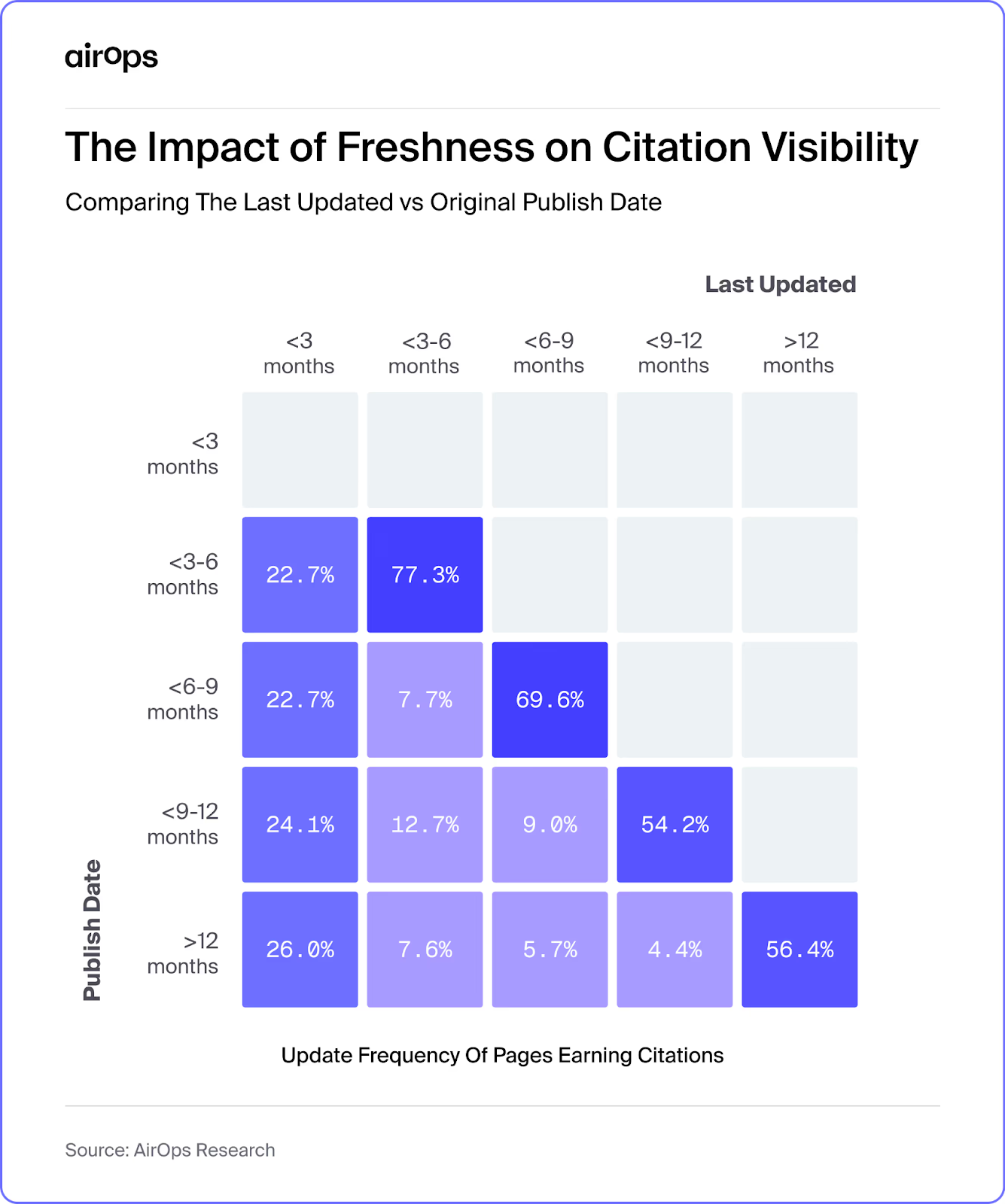

There is real data behind this. According to AirOps analysis, content refreshed within the last quarter is roughly three times more likely to be cited in AI-generated answers than older content.

Reference: The Silent Pipeline Killer: How Stale Content Costs You AI Citations (and Customers)

AI systems do not just look for authority. They look for clarity that reflects current language. If your explanations lag behind how buyers are asking questions today, citations quietly shift elsewhere.

AirOps helps teams:

Track which questions are rising or fading

Refresh canonical answers as language changes

Ensure consistency across pages, FAQs, sales assets, and product

Prevent explanation drift over time

Humans still own the answers.

AirOps keeps the system from breaking.

A Simple Mental Model

If a question matters:

It has one canonical answer

That answer appears everywhere it’s needed

AI systems can reuse it cleanly

Humans don’t have to reinterpret it each time

That’s an answer engine.

Not content velocity.

Not SEO hacks.

Not clever formatting.

Clarity, repeated consistently.

The AEO Takeaway

Turning questions into an answer engine is how AEO compounds.

Instead of publishing more, you explain better.

Instead of chasing rankings, you shape understanding.

Instead of reacting to AI, you train it.

That’s how elite teams stop playing catch-up and start owning the narrative.

12. Operationalizing Question Mining (So It Actually Runs)

Most teams don’t fail at question mining because they lack insight.

They fail because nothing owns it.

No cadence.

No backlog.

No decision rights.

No follow-through.

The highest-signal inputs usually come from live sources that capture true user behavior: qualified chat like Intercom, demo and contact forms, on-site search logs, support tickets, and sales transcripts, plus demand and behavior signals from Google Search Console and Google Analytics 4, and freshness and context from your CMS.

Question mining only works if it becomes a rhythm, not a brainstorm. Something lightweight, repeatable, and boring enough to survive real workloads.

This is where something like Page360 from AirOps becomes critical. It brings together GA4 engagement, Search Console demand, and AI search citations at the page level so prioritization stops being opinion-driven and becomes an evidence-backed weekly queue.

Instead of arguing about what to update next, teams can see which canonical answers are underperforming relative to demand and act with confidence.

The Weekly Question Review Cadence

If question mining isn’t reviewed weekly, it’s already falling behind.

Not a big meeting.

Not a presentation.

A short, disciplined review.

The goal of the weekly cadence is simple:

What new questions surfaced?

Which ones matter?

What are we doing about them?

A strong weekly review looks like:

20–30 minutes

Same attendees

Same structure every time

Agenda:

New questions added since last week

Repeated questions gaining momentum

One decision: promote, park, or ignore

No ideation.

No content planning.

Just triage.

This keeps the backlog honest and prevents everything from feeling “important.”

The Shared Question Backlog (Single Source of Truth)

Every question lives in one place.

Not Slack threads.

Not Google Docs.

Not someone’s notes.

A shared backlog includes:

Raw question text

Source (sales, Reddit, support, chat, etc.)

Frequency or confidence level

Intent cluster

Current status (new, clustered, answered)

This backlog is not owned by content.

It’s owned by the system.

In practice, “every surface” isn’t abstract. It means your CMS (Webflow, Shopify, etc.) plus the backlog tools where real work gets managed: Notion, Airtable, Asana, Slack, etc.

The system doesn’t replace them. It feeds them.

Questions get clustered and prioritized centrally, then routed into the workflows your team already runs. Publishing happens in your CMS. Execution lives in your task systems. AirOps sits between signal and action, turning ambiguity into a clear, shippable queue.

If a question isn’t in the backlog, it doesn’t exist.

If it’s answered, it gets marked as such.

If it keeps resurfacing, that’s a signal the answer isn’t working yet.

This is how you avoid solving the same problem five times a year.
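As a data structure, the backlog is small. The sketch below mirrors the fields listed above. The class names, the lowercase matching key, and the `resurfaced` flag are illustrative assumptions, not a schema from any particular tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BacklogEntry:
    text: str                                        # raw question text, as asked
    sources: set[str] = field(default_factory=set)   # "sales", "reddit", "support", ...
    mentions: int = 0                                # frequency
    intent_cluster: Optional[str] = None
    status: str = "new"                              # "new", "clustered", or "answered"
    resurfaced: bool = False                         # assumption: flag added for this sketch


class Backlog:
    def __init__(self) -> None:
        self.entries: dict[str, BacklogEntry] = {}

    def log(self, text: str, source: str) -> BacklogEntry:
        """Record one mention; repeated wording lands on the same entry."""
        key = text.strip().lower()
        entry = self.entries.setdefault(key, BacklogEntry(text=text.strip()))
        entry.sources.add(source)
        entry.mentions += 1
        if entry.status == "answered":
            # Asked again after being answered: the answer is not working yet.
            entry.resurfaced = True
        return entry

    def mark_answered(self, text: str) -> None:
        """Raises KeyError if the question was never logged."""
        self.entries[text.strip().lower()].status = "answered"


backlog = Backlog()
backlog.log("Is this only for enterprise?", "sales")
backlog.log("Is this only for enterprise?", "reddit")
backlog.mark_answered("Is this only for enterprise?")
entry = backlog.log("Is this only for enterprise?", "support")
```

After the last call, `entry.resurfaced` is `True`, which is exactly the signal that keeps a team from solving the same problem again in six months.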

The Ownership Model (This Is Critical)

Question mining dies when everyone is “involved” and no one is accountable.

The cleanest model looks like this:

PMM owns the question quality

What questions matter

How they’re framed

Whether the answer resolves the real uncertainty

Growth owns the surfaces

Where answers live

How they show up across the site, AEO pages, and comparisons

Whether answers actually get seen

Sales owns escalation

Which questions block deals

Which objections keep resurfacing

When positioning breaks down live

Support feeds signal.

Content executes.

But PMM and Growth are the spine.

This alignment is what turns AEO from “content strategy” into GTM infrastructure.

Automation as Leverage, Not Replacement

Automation is not here to decide what matters.

It’s here to make sure you don’t miss it.

At scale, questions come from everywhere:

Sales transcripts

Support tickets

Chat tools

Reviews

Internal search

Social threads

Manually tracking that is impossible.

This is where AirOps becomes foundational.

AirOps helps teams:

Aggregate questions across sources automatically

Normalize phrasing without losing intent

Detect repeated uncertainty early

Keep clusters fresh as language evolves

What AirOps does not do:

Decide which questions deserve ownership

Write positioning

Replace judgment

Humans decide.

Automation makes it survivable.

If this still feels abstract, here is what it looks like in practice.

Eoin Clancy from AirOps recently did an awesome demo with Dave Gerhardt of Exit Five (the relevant segment starts at 21:23) that shows how teams turn sales transcripts from the last 90 days into a prioritized GTM question backlog, then generate brief-ready outputs with human review.

The workflow pulls recent transcripts and metadata, extracts both explicit and implicit questions, overlays them with quantitative demand signals, and outputs a ranked list of bottom-of-funnel topics. Humans stay in control of approvals and positioning. The system handles the scale.

This is the missing bridge most teams never build. Sales language becomes structured signal. Structured signal becomes an executable backlog.
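A stripped-down version of that bridge might look like the sketch below. It is not the workflow from the demo. It only catches explicit questions (sentences ending in a question mark), since implicit ones would need a language model. The `demand` lookup is a hypothetical stand-in for a search-volume export, and ranking by call count with demand as the tie-breaker is an assumption.

```python
import re
from collections import Counter

# A run of text with no sentence-ending punctuation, followed by "?".
QUESTION_RE = re.compile(r"[^.?!\n]*\?")


def explicit_questions(transcript: str) -> list[str]:
    """Return sentences that end in a question mark."""
    found = (m.strip() for m in QUESTION_RE.findall(transcript))
    return [q for q in found if q != "?"]


def rank_questions(
    transcripts: list[str], demand: dict[str, int]
) -> list[tuple[str, int, int]]:
    """Rank by number of calls mentioning a question, then by demand."""
    calls: Counter = Counter()
    for transcript in transcripts:
        # Count each question once per call so one long call cannot dominate.
        calls.update({q.lower() for q in explicit_questions(transcript)})
    rows = [(q, n, demand.get(q, 0)) for q, n in calls.items()]
    return sorted(rows, key=lambda row: (-row[1], -row[2], row[0]))


transcripts = [
    "We like the demo. Is this only for enterprise? Our team is small.",
    "Is this only for enterprise? How long does setup take?",
    "How does pricing work? We need a quote soon.",
]
demand = {"how does pricing work?": 900, "how long does setup take?": 300}
ranked = rank_questions(transcripts, demand)
```

The output is a ranked list of rows, not a content plan. Approvals and positioning still belong to people.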

How This Actually Feels When It’s Working

When question mining is operationalized properly:

Sales stops improvising answers

Content feels calmer and more focused

PMM stops reacting late

AI explanations start sounding more like you

Fewer “why are we still getting this question?” moments

The system absorbs chaos so people don’t have to.

A Simple Rule That Keeps It Alive

If a question:

Shows up in more than one source

Affects a buying or adoption decision

Keeps resurfacing after being “answered”

It deserves attention.

No debate.

No backlog guilt.

Just action.
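The rule is simple enough to write down as a check. The list above does not say whether a question must meet all three conditions or just one, so this sketch assumes any single condition is enough and leaves a `require_all` switch for teams that read it the stricter way. The names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class QuestionSignals:
    sources: set[str]               # where the question has shown up
    affects_decision: bool          # touches a buying or adoption decision
    resurfaced_after_answer: bool   # asked again after being "answered"


def deserves_attention(q: QuestionSignals, require_all: bool = False) -> bool:
    checks = [
        len(q.sources) > 1,
        q.affects_decision,
        q.resurfaced_after_answer,
    ]
    return all(checks) if require_all else any(checks)


one_off = QuestionSignals({"reddit"}, affects_decision=False, resurfaced_after_answer=False)
blocker = QuestionSignals({"sales"}, affects_decision=True, resurfaced_after_answer=False)
```

Here `deserves_attention(one_off)` is `False` and `deserves_attention(blocker)` is `True`. No debate required.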

The AEO Takeaway

Question mining doesn’t fail because teams don’t care.

It fails because it’s treated like a task instead of a system.

When you give it a cadence, a backlog, clear ownership, and automation as leverage, it becomes self-sustaining.

That’s when AEO stops being a project and starts being a competitive advantage.

13. Where Automation and AI Multiply Impact

Up to this point, everything in this guide could be done manually.

You could read the threads.

Listen to the calls.

Review the tickets.

Scan the chats.

It would even work for a while.

Then volume hits. Language shifts. New objections appear. AI systems change how they frame answers. The system that felt manageable becomes brittle.

That’s the moment automation stops being optional.

Not to replace thinking.

To keep thinking effective at scale.

What Automation Is Actually Good At (and Humans Aren’t)

Humans are excellent at judgment.

They’re terrible at repetition.

Automation shines in four places that quietly matter for AEO.

Aggregation at Scale

Questions don’t come from one place. They come from sales calls, support tickets, chat tools, reviews, internal search, Reddit, and social threads.

Workflow automation tools like Gumloop or n8n handle this first layer. They pull transcripts, scrape communities, and route raw question data into a single stream.

They don’t decide what matters.

They make sure nothing gets dropped.

Normalization without Flattening Meaning

The same question appears phrased ten different ways:

“Is this overkill?”

“Are we too small?”

“Is this only for enterprise?”

Automation groups those variations so humans can reason about intent instead of drowning in phrasing differences.
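Here is a deliberately simple sketch of that grouping. Real systems would more likely use embeddings or a language model. The hand-written cue lists and intent labels below are hypothetical stand-ins so the idea fits in a few lines. Note that the original phrasing is kept inside each group, so nothing gets flattened.

```python
from collections import defaultdict

# Hypothetical cue phrases per intent; a real system would learn these.
INTENT_CUES = {
    "fit_for_company_size": ("overkill", "too small", "only for enterprise"),
    "time_to_value": ("how long", "setup", "onboard"),
}


def intent_for(question: str) -> str:
    """Return the first intent whose cue appears in the question."""
    text = question.lower()
    for intent, cues in INTENT_CUES.items():
        if any(cue in text for cue in cues):
            return intent
    return "unclustered"


def group_by_intent(questions: list[str]) -> dict[str, list[str]]:
    """Group questions by intent while keeping each one's raw wording."""
    groups: dict[str, list[str]] = defaultdict(list)
    for question in questions:
        groups[intent_for(question)].append(question)
    return dict(groups)


groups = group_by_intent([
    "Is this overkill?",
    "Are we too small?",
    "Is this only for enterprise?",
    "Does it work with Webflow?",
])
```

The three phrasings from the list above land in one group, and the question nobody anticipated lands in "unclustered" for a human to look at.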

Pattern Detection Over Time

Humans notice spikes.

Automation notices drift.

Which questions are quietly increasing?

Which ones stopped appearing?

Which explanations no longer resolve uncertainty?

This matters because AI systems reuse language that persists, not language that spikes once.
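One way to tell drift from a spike is to fit a trend line to weekly mention counts instead of reacting to the biggest week. The sketch below uses an ordinary least-squares slope. The 0.5 mentions-per-week threshold and the three-quiet-weeks rule are arbitrary assumptions to tune against your own volume.

```python
def slope(counts: list[int]) -> float:
    """Least-squares slope of weekly counts (mentions per week, per week)."""
    n = len(counts)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def classify(counts: list[int], threshold: float = 0.5, quiet_weeks: int = 3) -> str:
    """Label a question's trend from its weekly mention counts, oldest first."""
    recent = counts[-quiet_weeks:]
    if any(counts) and len(recent) == quiet_weeks and not any(recent):
        return "stopped"
    trend = slope(counts)
    if trend >= threshold:
        return "rising"
    if trend <= -threshold:
        return "fading"
    return "steady"


quiet_climb = classify([1, 2, 2, 3, 4, 5])   # steady growth, no single big week
one_spike = classify([1, 1, 9, 1, 1, 1])     # one loud week, no trend
gone_quiet = classify([4, 3, 2, 0, 0, 0])    # question stopped appearing
```

The quiet climb is labeled "rising" while the single loud week is labeled "steady", which is the difference between drift and a spike.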

Maintenance, Not One-Time Creation

The hardest part of AEO isn’t writing answers. It’s keeping them current as language evolves.

Automation flags when answers need attention. Humans decide what to do.
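A flag can be as plain as a list of reasons. In this sketch an answer is flagged when it has not been touched in a while, or when the question keeps being asked after the last update. The 180-day and three-mention limits are placeholder assumptions, and the function only reports. It never rewrites anything.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CanonicalAnswer:
    question: str
    last_updated: date


def needs_attention(
    answer: CanonicalAnswer,
    mention_dates: list[date],
    today: date,
    max_age_days: int = 180,
    max_repeats: int = 3,
) -> list[str]:
    """Return the reasons an answer should be reviewed (empty list = fine)."""
    reasons = []
    if (today - answer.last_updated).days > max_age_days:
        reasons.append("stale")
    repeats = sum(1 for d in mention_dates if d > answer.last_updated)
    if repeats >= max_repeats:
        reasons.append("still being asked")
    return reasons


answer = CanonicalAnswer("Is this only for enterprise?", last_updated=date(2025, 1, 10))
asked_on = [date(2025, 2, 3), date(2025, 2, 20), date(2025, 3, 1)]
flags = needs_attention(answer, asked_on, today=date(2025, 3, 17))
```

Here `flags` contains "still being asked" but not "stale": the page is recent, yet it is not resolving the uncertainty. What to do about that is a human call.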

Where Tools like AirOps Fit in the Stack

Some tools help you move data. AirOps helps you manage the end-to-end lifecycle: cluster → prioritize → draft/refresh → publish/sync → measure, all with humans in control of judgment.

If Gumloop or n8n move data, AirOps is where questions turn into answers and answers turn into assets.

AirOps is not just analysis infrastructure.

It’s execution infrastructure.

Used well, AirOps helps teams:

Normalize question language across every source

Cluster questions by decision logic, not keywords

Identify which questions deserve canonical answers

Generate first-draft AEO content from approved clusters

Refresh and rewrite answers as buyer language evolves

Maintain consistency across site, comparisons, FAQs, and enablement

Automate updates without explanation drift

This is a critical distinction.

Workflow tools move information.

AirOps creates and maintains the explanation layer.

Clustering → Content → Refresh (The Real Flywheel)

This is what the flywheel actually looks like:

Questions flow in from workflows (sales, support, chat, social)

AirOps clusters them by intent and decision logic

Humans approve which clusters matter

AirOps generates or updates canonical answers

Those answers ship across AEO pages, FAQs, comparisons, messaging, positioning strategy, lifecycle campaigns, sales assets, and more

New questions test the explanations

AirOps flags what needs refinement

Creation and refresh are continuous.

This is how answer engines stay alive.
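The loop above can be read as a small state machine with one gate that only a person can open. The state names and the `actor` values below are labels invented for this sketch. They are not AirOps concepts.

```python
# Allowed moves around the flywheel; "flagged" loops back into drafting.
TRANSITIONS = {
    "incoming": {"clustered"},
    "clustered": {"approved"},
    "approved": {"drafted"},
    "drafted": {"shipped"},
    "shipped": {"flagged"},
    "flagged": {"drafted"},
}

# Deciding which clusters matter is reserved for people.
HUMAN_ONLY = {("clustered", "approved")}


def advance(state: str, target: str, actor: str) -> str:
    """Move a cluster to the next stage, enforcing order and the human gate."""
    if target not in TRANSITIONS.get(state, set()):
        raise ValueError(f"cannot move from {state!r} to {target!r}")
    if (state, target) in HUMAN_ONLY and actor != "human":
        raise PermissionError("only a human can approve a cluster")
    return target


state = "incoming"
for target, actor in [
    ("clustered", "automation"),
    ("approved", "human"),
    ("drafted", "automation"),
    ("shipped", "automation"),
    ("flagged", "automation"),
    ("drafted", "automation"),   # refresh: the loop continues
]:
    state = advance(state, target, actor)
```

There is no terminal state. A shipped answer can always be flagged and redrafted, which is what makes it a flywheel rather than a pipeline.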

Why This Matters for AEO Specifically

AI systems do not reward:

Static pages

Outdated phrasing

Inconsistent explanations

They reward:

Clear, current answers

Consistent framing across surfaces

Language that matches how buyers actually ask questions now

Automation makes that possible.

Not by guessing.

By reacting faster than humans can alone.

Human-in-the-Loop Is Still Non-Negotiable

The failure mode of automation is outsourcing judgment.

That’s not how elite teams work.

The winning model looks like this:

Workflow tools aggregate and route

AirOps clusters, drafts, refreshes, and maintains

Humans approve priority, framing, and tone

Automation ensures consistency and scale

AI expands your execution capacity.

Humans define truth and strategy.

What This Feels Like When It’s Working

When this layer is set up correctly:

Content stays fresh without rewrites from scratch

The same questions stop resurfacing

Sales hears fewer “can you clarify…” objections

AI explanations stay aligned with your positioning

Teams stop arguing about what to write next

The system absorbs change instead of breaking under it.

The AEO Takeaway

Automation doesn’t replace thinking.

It protects it from scale.

Workflow tools keep the pipes flowing.

AirOps turns questions into answers and keeps them relevant.

That’s how question mining stops being a project and becomes a durable advantage.

14. Question Mining → AEO → Revenue

Question mining isn’t a content exercise.

It’s a revenue system.

When teams do this well, the outcome isn’t “better blogs” or “more visibility.” It’s buyers who arrive clearer, decide faster, and trust you earlier than they would have otherwise.

That’s the throughline.

Faster Trust Formation (Before You Ever Show Up)

Trust used to form during the sales process.

Now it forms before the first interaction.

When AI systems answer questions clearly, consistently, and with the right tradeoffs, buyers show up with confidence instead of skepticism. They’ve already internalized how your product works, where it fits, and where it doesn’t.